Good Move

Bad Move

Tetris is a classic tile-matching video game created in 1984, in which geometric shapes made of four square blocks, known as tetrominos, fall into a 10 x 20 playing field.

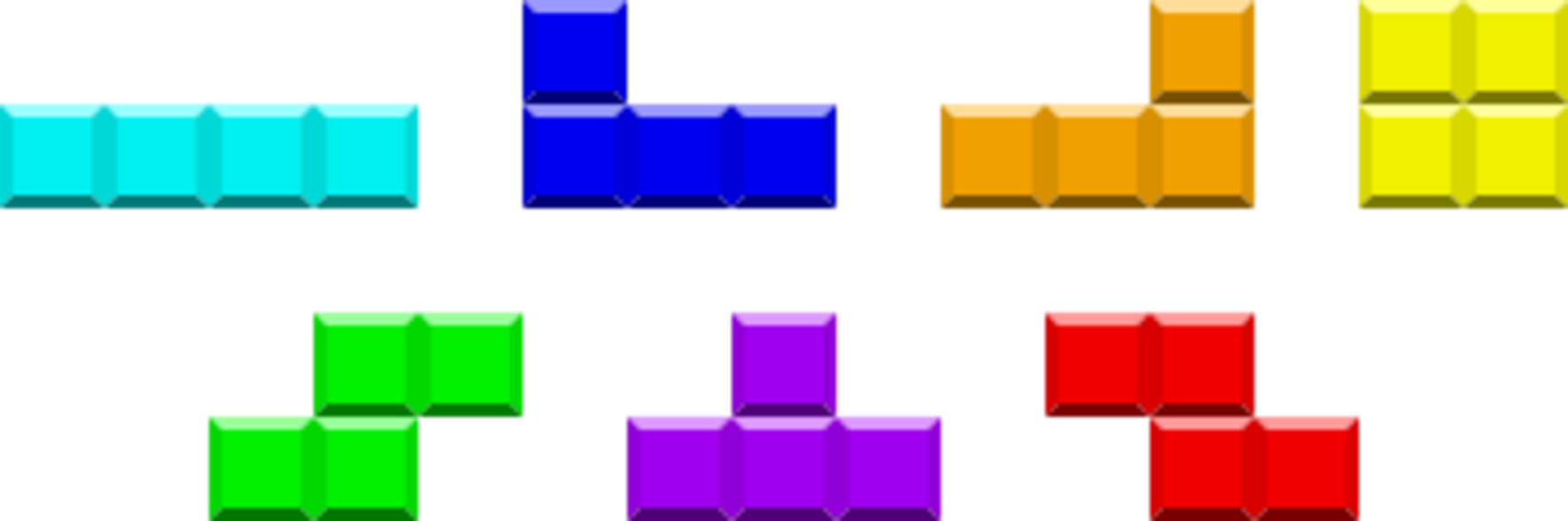

There are 7 different types of tetrominos, detailed below. Players must rotate and move these blocks to complete lines. When a line is complete (no gaps), it disappears and any blocks above it shift down. The game ends when the stack of tetrominos reaches the top of the playing field. Clearing more than one line with a single tetromino scores more points, so optimal play means setting up four-line clears, each called a 'Tetris'.
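The line-clearing rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the project's actual code: the board representation (a list of rows, top row first, with 0 for empty and 1 for filled) and the name `clear_lines` are assumptions made for the example.

```python
def clear_lines(board):
    """Remove full rows and shift the rows above them down.

    `board` is a list of rows (top row first); each cell is 0 (empty) or 1 (filled).
    Returns (new_board, number_of_cleared_rows).
    """
    width = len(board[0])
    # Keep only the rows that still contain at least one gap.
    remaining = [row for row in board if not all(row)]
    cleared = len(board) - len(remaining)
    # Pad with empty rows at the top so the board keeps its height.
    empty_rows = [[0] * width for _ in range(cleared)]
    return empty_rows + remaining, cleared


# Tiny 4 x 4 board (assumed size, for readability) with two full rows at the bottom.
board = [
    [0, 0, 0, 0],
    [1, 0, 0, 1],
    [1, 1, 1, 1],
    [1, 1, 1, 1],
]
new_board, cleared = clear_lines(board)
```

Here `cleared` is 2 and the incomplete row drops to the bottom of `new_board`. A four-row clear from a single piece would be the 'Tetris' case.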

Our AI model is designed to find the best move for any given board and any given active tetromino.

Good moves include clearing lines, minimising holes and opening opportunities for combo line clears.

An advanced 'Good move' for our AI would be setting up Tetris combos: giving up some points that could be gained earlier by completing rows one at a time, in order to gain maximum points in the long run.

Bad moves create 'gaps' (holes where pieces cannot be placed) and 'towers' (stacking up without completing rows).
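To make 'gaps' and 'towers' concrete, the sketch below measures both on a small board. It assumes the same illustrative representation as before (a list of rows, top row first, 0 for empty and 1 for filled); the function names `column_heights` and `count_holes` are hypothetical and not taken from the project.

```python
def column_heights(board):
    """Height of each column, measured from its topmost filled cell to the floor."""
    rows, cols = len(board), len(board[0])
    heights = []
    for c in range(cols):
        height = 0
        for r in range(rows):
            if board[r][c]:
                height = rows - r
                break
        heights.append(height)
    return heights


def count_holes(board):
    """Count empty cells that have at least one filled cell above them."""
    rows, cols = len(board), len(board[0])
    holes = 0
    for c in range(cols):
        seen_block = False
        for r in range(rows):
            if board[r][c]:
                seen_block = True
            elif seen_block:
                holes += 1
    return holes


# Assumed 4 x 4 example: column 0 is a small tower with a covered gap under it.
board = [
    [0, 0, 0, 0],
    [1, 0, 0, 0],
    [1, 1, 0, 0],
    [0, 1, 0, 1],
]
heights = column_heights(board)
holes = count_holes(board)
```

A move that raises `holes` or pushes one entry of `heights` far above its neighbours is the kind of placement described above as a bad move.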

There are 7 tetrominos in Tetris, each denoted by the letter it most resembles: I, J, L, O, S, T and Z.
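One possible way to represent the seven pieces in code is as sets of four (row, column) cells. The sketch below is an illustration under that assumption; the chosen orientations, the `TETROMINOES` table and the helper names are hypothetical and are not taken from the project.

```python
# Each tetromino as four (row, col) cells; row 0 is the top of the piece.
# The starting orientations are an illustrative choice.
TETROMINOES = {
    "I": [(0, 0), (0, 1), (0, 2), (0, 3)],
    "J": [(0, 0), (1, 0), (1, 1), (1, 2)],
    "L": [(0, 2), (1, 0), (1, 1), (1, 2)],
    "O": [(0, 0), (0, 1), (1, 0), (1, 1)],
    "S": [(0, 1), (0, 2), (1, 0), (1, 1)],
    "T": [(0, 1), (1, 0), (1, 1), (1, 2)],
    "Z": [(0, 0), (0, 1), (1, 1), (1, 2)],
}


def rotate_clockwise(cells):
    """Rotate cells 90 degrees clockwise, then shift them back to the origin."""
    rotated = [(c, -r) for r, c in cells]
    min_r = min(r for r, _ in rotated)
    min_c = min(c for _, c in rotated)
    return sorted((r - min_r, c - min_c) for r, c in rotated)


def orientations(cells):
    """All distinct orientations of a piece, found by rotating up to four times."""
    seen = []
    current = sorted(cells)
    for _ in range(4):
        if current not in seen:
            seen.append(current)
        current = rotate_clockwise(current)
    return seen


orientation_counts = {name: len(orientations(cells)) for name, cells in TETROMINOES.items()}
```

With this representation the O piece has one distinct orientation, I, S and Z have two each, and J, L and T have four each, which bounds how many placements need to be considered per piece.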

Our AI (the Tetris Master 3000) uses DQN (Deep Q-Network) reinforcement learning to train our neural network model. Below you can see our AI training on the left side and playing on the right.

Our objective is, for a given state, to calculate the expected final reward. The game is run by providing the AI with all possible final states for the next block; the AI then chooses the best of those states.
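The selection step described above can be sketched as follows. This is a hedged illustration only: `toy_value` is a hypothetical hand-written stand-in for the trained network, and the three-number state description (lines cleared, holes, maximum height) and the weights are assumptions made for the example, not the project's actual inputs.

```python
def choose_best_state(candidates, value_fn):
    """Return the (action, state) pair whose state has the highest predicted value.

    `candidates` maps an action, e.g. (column, rotation), to a description of
    the board after the piece has landed.
    """
    return max(candidates.items(), key=lambda item: value_fn(item[1]))


def toy_value(features):
    """Hypothetical stand-in for the trained network's reward estimate."""
    lines_cleared, holes, max_height = features
    return 10.0 * lines_cleared - 4.0 * holes - 1.0 * max_height


# Assumed candidate placements for one piece: action -> (lines, holes, max height).
candidates = {
    (0, 0): (0, 2, 5),  # no line cleared, two holes created
    (3, 1): (1, 0, 4),  # clears one line, no holes
    (7, 0): (0, 0, 6),  # clean placement, but builds a tower
}
best_action, best_features = choose_best_state(candidates, toy_value)
```

In the real system the value function would be the DQN's output for each candidate state; the surrounding loop (enumerate final states, score each, pick the maximum) stays the same.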

The benefits of using such a model instead of calculating specific moves:

A benefit of providing our AI with 'metadata' about the game board is that we can change the size of the Tetris board and use the same model to achieve similarly excellent results. The video below shows our AI playing on a 30 x 20 Tetris board, using a model trained exclusively on a 10 x 20 board.
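One way to see why summary 'metadata' transfers across board sizes is that the summary can have the same length whatever the board's width. The sketch below assumes a four-number feature set (holes, total height, bumpiness, maximum height); the source does not list the project's actual features, so these and the function names are hypothetical.

```python
def board_features(board):
    """Summarise a board of any size as four numbers (an assumed feature set)."""
    rows, cols = len(board), len(board[0])
    heights, holes = [], 0
    for c in range(cols):
        column = [board[r][c] for r in range(rows)]
        # Index of the topmost filled cell, or `rows` if the column is empty.
        top = column.index(1) if 1 in column else rows
        heights.append(rows - top)
        # Empty cells below the topmost filled cell are holes.
        holes += column[top:].count(0)
    bumpiness = sum(abs(a - b) for a, b in zip(heights, heights[1:]))
    return [holes, sum(heights), bumpiness, max(heights)]


def empty_board(cols, rows):
    """Build an empty board with the given width and height."""
    return [[0] * cols for _ in range(rows)]


narrow = empty_board(10, 20)
wide = empty_board(30, 20)
wide[19][0] = 1  # a single block on the floor of the wide board

narrow_features = board_features(narrow)
wide_features = board_features(wide)
```

Both feature lists have four entries, so a network that takes such a summary as input can be applied unchanged to the wider board.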

The Tetris Master 3000, depicted in human form, playing a version of Tetris where lines do not clear. The Tetris Master 3000 uses DQN and reinforcement learning to play Tetris (now better than its human developers!).